JSON to TOON Format: How It Reduces Token Usage and Speeds Up LLMs

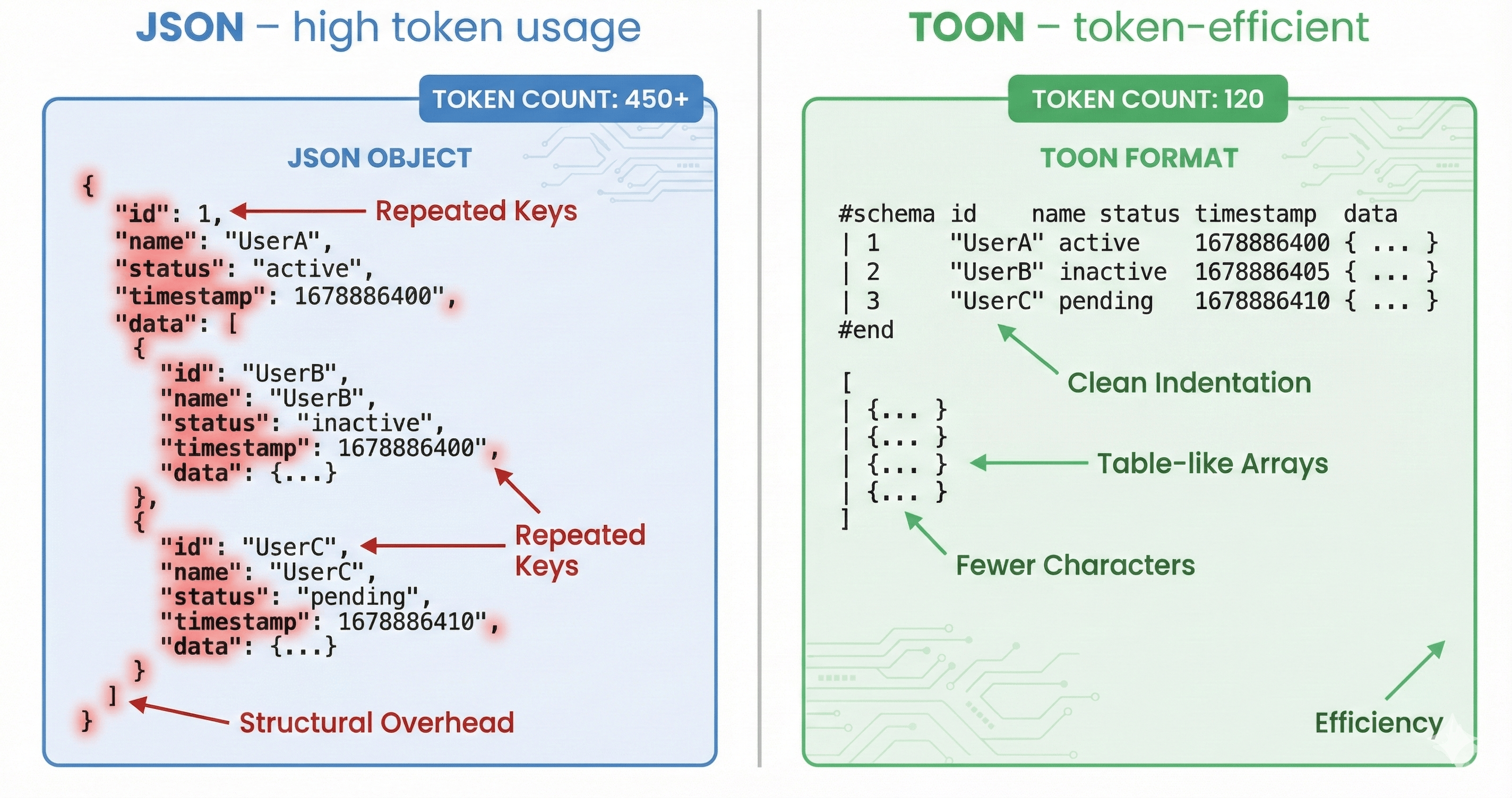

In the age of AI and large language models (LLMs), structured data is everywhere, and JSON has long been the standard for organizing it. But when feeding JSON directly into LLM prompts, every brace, quote, and repeated key adds extra tokens—slowing responses, increasing costs, and reducing usable context. That’s where TOON format comes in.

By using a JSON to TOON converter, you can transform standard JSON into TOON, a format that is compact, human-readable, and optimized for LLM prompts. This approach reduces token usage, improves model comprehension, and streamlines AI workflows. Whether you’re handling user data, product catalogs, or event logs, adopting TOON for prompts means faster, more efficient AI processing while keeping your data structured.

In this article, we’ll explore why JSON to TOON format is the future for LLM prompts, provide real-world examples, and show how the TOON format beats JSON in efficiency, readability, and AI performance.

What Is TOON? (Token-Oriented Object Notation)

TOON (Token-Oriented Object Notation) is a data format designed for one purpose:

minimizing token usage when sending structured data to large language models (LLMs).

Unlike traditional JSON, which spends many tokens on braces, quotes, and punctuation, TOON removes unnecessary symbols such as:

- Quotes

- Curly braces

- Commas

- Redundant key repetitions

- Deep indentation

- Excess punctuation

Instead, TOON uses a clean, table-like structure where:

- The first line defines the header

- Each following line represents a row

- Nesting is handled through indentation only

- Values are separated by pipes | or tabs

TOON Example

id | name | role | status

101 | Sarah | admin | active

102 | Omar | editor | inactive

103 | Lily | admin | active

JSON Equivalent

[

{ "id": 101, "name": "Sarah", "role": "admin", "status": "active" },

{ "id": 102, "name": "Omar", "role": "editor", "status": "inactive" },

{ "id": 103, "name": "Lily", "role": "admin", "status": "active" }

]

Even minified JSON is still much heavier than TOON.
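
The mapping from the JSON array to the table is mechanical. Here is a minimal sketch, assuming the simplified pipe-delimited layout shown in this article and a list of flat objects that all share the same keys. The function name `to_toon_table` is hypothetical and not part of any library.

```python
def to_toon_table(rows, delimiter=" | "):
    """Render a list of flat, same-keyed dicts as a header line plus one line per row."""
    if not rows:
        return ""
    keys = list(rows[0])  # the header comes from the first object's keys
    for row in rows:
        if list(row) != keys:
            raise ValueError("all rows must share the same keys in the same order")
    lines = [delimiter.join(keys)]
    lines += [delimiter.join(str(row[k]) for k in keys) for row in rows]
    return "\n".join(lines)


users = [
    {"id": 101, "name": "Sarah", "role": "admin", "status": "active"},
    {"id": 102, "name": "Omar", "role": "editor", "status": "inactive"},
    {"id": 103, "name": "Lily", "role": "admin", "status": "active"},
]
print(to_toon_table(users))
```

Values that contain the delimiter or a newline would need escaping, which this sketch does not attempt.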

Why JSON Format Wastes Tokens in LLM Prompts

JSON was built for machine-to-machine communication—not for tokenized language models. LLMs tokenize every little character, meaning JSON’s structure becomes expensive noise.

Where JSON wastes tokens:

- {} braces

- "" quotes

- , commas

- : colons

- Repeated keys

- Whitespace + indentation

- Brackets []

- Structural overhead

Result:

- More tokens

- Higher cost

- More attention usage

- Less space for meaningful data

- More parsing errors

- Worse accuracy

LLMs prefer dense, signal-rich input, and JSON is full of structural “noise”.
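
To get a feel for how much of a JSON payload is structure, the sketch below measures the share of punctuation characters in one minified record. Characters are only a proxy for tokens, and the helper name `structural_share` is an assumption made for illustration.

```python
import json

# Characters that carry JSON structure rather than data
STRUCTURAL = set('{}[]":,')


def structural_share(text):
    """Fraction of non-whitespace characters that are JSON punctuation."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 0.0
    return sum(c in STRUCTURAL for c in chars) / len(chars)


record = {"id": 101, "name": "Sarah", "role": "admin", "status": "active"}
minified = json.dumps(record, separators=(",", ":"))
print(minified)
print(f"{structural_share(minified):.0%} of characters are punctuation")
```

The repeated key names are not counted here, so the real overhead of an array of such records is higher than this figure suggests.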

Why TOON format was built (and when to use it)

The AI community realized that traditional formats like JSON and YAML are not optimized for LLM tokenization. Their syntax-heavy structure becomes a bottleneck when working with large datasets.

TOON was created to solve exactly these problems:

- Reduce token use

- Improve model understanding

- Remove formatting noise

- Increase the density of meaningful content

It's not a replacement for JSON everywhere—it's a prompt-level optimization layer.

TOON vs JSON: Key Differences and Token Impact

Structural Differences

| Feature | JSON | TOON |
| --- | --- | --- |
| Quotes | Required for keys and strings | None |
| Braces {} | Required | None |
| Commas | Required | None |
| Repeated Keys | Always repeated | Declared once in header |
| Token Cost | High | Low |
| Human Readability | Medium | High (table-like) |
| Optimized for LLMs | No | Yes |
| Format Style | Verbose syntax | Clean and minimal |

Why TOON wins for LLM prompts

- Uses fewer tokens

- Easier for models to parse

- More compact

- Higher signal-to-noise ratio

Competitive Comparison (TOON vs JSON)

| Feature | TOON | JSON |
| --- | --- | --- |
| Token Efficiency | 30–60% fewer tokens on uniform datasets | Verbose syntax inflates tokens |
| Readability | Human-friendly, table-like layout | Heavy structural punctuation |
| Ideal Use Case | Large uniform arrays (products, logs, RAG chunks) | Highly nested or irregular data |
| Data Fidelity | 100% lossless when converted with tools | Native storage format |
| Ecosystem | Emerging | Universal |
| Performance in LLMs | Higher parsing accuracy | More confusion + structural noise |
| Limitations | Not ideal for deeply nested data | Handles nesting well |

Why TOON saves tokens

Because:

- Keys appear once instead of in every row

- No structural punctuation

- Rows are denser

- Values are tokenized in sequence

For large prompts, this is a massive saving.

Real Token Savings Example

- JSON (minified): {"id":1,"name":"Sara","role":"admin"} → ~30 tokens (approx.)

- TOON: a header line "id | name | role" plus a row "1 | Sara | admin" → ~8 tokens (approx.)

Savings: ~70% of tokens

Python developers can convert JSON to TOON with a small helper library. Here’s a simple example; it assumes a package exposing convert_to_toon is installed under the name jsontotoon:

    # Assumes the jsontotoon package named in this article is installed
    from jsontotoon import convert_to_toon

    # Sample data: a uniform array of user records
    data = [
        {"id": 101, "name": "Sarah", "role": "admin", "status": "active"},
        {"id": 102, "name": "Omar", "role": "editor", "status": "inactive"},
        {"id": 103, "name": "Lily", "role": "admin", "status": "active"},
    ]

    # Convert the records to TOON and print the result
    toon_data = convert_to_toon(data)
    print(toon_data)

This snippet shows how Python can handle JSON to TOON conversion, preserving data while reducing token usage for LLM prompts.

Token counts vary by tokenizer; these are approximations for illustration.

And this scales massively when you have hundreds or thousands of rows.
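
The scaling claim can be checked with a rough sketch that builds N synthetic rows and compares an estimated token count for minified JSON against the table layout. The regex-based `rough_tokens` counter is an assumption standing in for a real tokenizer, and `to_table` and `make_rows` are hypothetical helpers, so treat the numbers as relative rather than absolute.

```python
import json
import re


def rough_tokens(text):
    """Crude proxy: each word/number run and each punctuation mark counts as one token."""
    return len(re.findall(r"\w+|[^\w\s]", text))


def to_table(rows):
    """Pipe-delimited table: keys once in the header, then one line per row."""
    keys = list(rows[0])
    lines = [" | ".join(keys)]
    lines += [" | ".join(str(r[k]) for k in keys) for r in rows]
    return "\n".join(lines)


def make_rows(n):
    """Synthetic uniform records used only for this comparison."""
    return [{"id": i, "name": f"user{i}", "role": "admin"} for i in range(1, n + 1)]


for n in (1, 10, 100, 1000):
    rows = make_rows(n)
    as_json = rough_tokens(json.dumps(rows, separators=(",", ":")))
    as_table = rough_tokens(to_table(rows))
    saving = 1 - as_table / as_json
    print(f"{n:>5} rows: JSON ~{as_json}, table ~{as_table}, saving {saving:.0%}")
```

Under this proxy the saving grows with the row count, because the header is paid for once while JSON repeats every key in every row.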

Beyond Cost: TOON Improves LLM Accuracy

Using TOON is not just about token reduction. It directly improves model comprehension and reasoning.

LLMs get confused by JSON for three main reasons:

A. JSON Creates “Structured Noise”

When you provide JSON to an LLM, it has to do two jobs:

- Validate the structure

- Parse the actual data

But JSON’s structure includes tons of extra tokens:

- {} and []

- repeated keys

- quotes

- nested structures

These extra tokens distract the model from the content that matters.

B. TOON Increases Signal-to-Noise Ratio

TOON removes the noise and gives the model the important part:

the values.

This makes LLM parsing:

- Faster

- More accurate

- Less error-prone

- More consistent

In the benchmarks cited in the FAQ below, LLMs were more accurate with TOON input than with JSON input (86.6% vs 83.2%).

C. Better RAG (Retrieval-Augmented Generation)

TOON is extremely powerful for RAG pipelines.

Why?

Because you can fit more documents inside the same context window.

- JSON RAG chunk: about 40% of tokens wasted on structure

- TOON RAG chunk: only 5–8% structural overhead

This means:

- Your prompts can include more knowledge

- Your LLM sees denser information

- Your retrieval is far more accurate

This is a direct performance boost.
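
The context-window argument can be illustrated with a greedy packer that keeps retrieved chunks until a token budget runs out. This is a sketch under stated assumptions: `rough_tokens` uses the common rule of thumb of about four characters per token, and `pack_chunks`, the sample records, and the budget of 200 are all hypothetical.

```python
import json


def rough_tokens(text):
    """Assumption: roughly 4 characters per token."""
    return max(1, len(text) // 4)


def pack_chunks(chunks, budget):
    """Keep chunks in ranked order until the next one would exceed the budget."""
    kept, used = [], 0
    for chunk in chunks:
        cost = rough_tokens(chunk)
        if used + cost > budget:
            break
        kept.append(chunk)
        used += cost
    return kept


records = [{"id": i, "title": f"Doc {i}", "score": 0.9} for i in range(50)]
json_chunks = [json.dumps(r) for r in records]
header = "id | title | score"
row_chunks = [f"{r['id']} | {r['title']} | {r['score']}" for r in records]

budget = 200
json_fit = pack_chunks(json_chunks, budget)
# The table pays for its header once, so subtract that from the row budget
rows_fit = pack_chunks(row_chunks, budget - rough_tokens(header))
print(len(json_fit), "JSON chunks fit;", len(rows_fit), "table rows fit")
```

With real documents the gap depends on how much of each chunk is free text versus structured fields.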

When to Use TOON and When to Stick With JSON

Let’s be practical. TOON is not for everything.

Here’s the correct strategy:

A. When You Should Use TOON Format

Use TOON format for:

- Arrays of identical objects

- Product lists

- Financial transactions

- User lists

- Chat history

- Vector store / RAG results

- LLM → LLM data transfer

- Prompting with structured output requirements

If it looks like a table → TOON wins.

B. When You Should Stick to JSON format

JSON format is still perfect for:

- Complex, deeply nested data

- APIs and server communication

- Configuration files

- Storage and databases

- Small payloads where token savings are negligible

TOON is not a general-purpose replacement.
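
The "looks like a table" rule can be turned into a quick check before converting. The sketch below is one possible heuristic, not a definitive test, and `looks_like_table` is a hypothetical name: it accepts only a non-empty list of flat objects with identical keys and leaves everything else as JSON.

```python
def looks_like_table(data):
    """True when data is a non-empty list of flat dicts that all share the same keys."""
    if not isinstance(data, list) or not data:
        return False
    if not all(isinstance(item, dict) for item in data):
        return False
    keys = set(data[0])
    for item in data:
        if set(item) != keys:
            return False  # ragged rows do not fit a single header
        if any(isinstance(v, (dict, list)) for v in item.values()):
            return False  # nested values are better left as JSON
    return True


print(looks_like_table([{"id": 1, "name": "Sara"}, {"id": 2, "name": "Omar"}]))
print(looks_like_table([{"id": 1, "tags": ["a", "b"]}]))
print(looks_like_table({"config": {"debug": True}}))
```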

Real-World Use Cases

E-Commerce Product Catalogs

Converting product lists from JSON to TOON saves tokens while sending large inventories to AI models for recommendation, summarization, or data enrichment.

User Data Lists

For customer support or CRM systems, converting user arrays into TOON format reduces token bloat, enabling faster LLM responses and improved analytics.

Financial Transactions

Massive transaction logs can be compressed into TOON tables. This saves costs while ensuring precise structured data is available for AI fraud detection or reporting.

Chat History

Chatbots and conversation analytics benefit from an online JSON to TOON converter, which represents dialogue logs in TOON format, reducing token usage while preserving structure.

Can TOON Be Used for API Communication?

TOON can be used for API communication, but with a few practical considerations. Since TOON is a human-readable, indentation-based data format designed to reduce token usage for LLMs, it isn’t a drop-in replacement for JSON in traditional APIs. However, it can play a valuable role depending on how your system is structured.

C. The Hybrid Strategy (Best Practice)

This is the most powerful modern AI architecture:

1. Application Layer (JSON)

Your databases, APIs, and services stay in JSON.

2. Conversion Layer

Right before sending data to an LLM:

- Convert JSON to TOON

- Send TOON to the model

- Save tokens and increase accuracy

3. LLM Instruction Layer

Tell the model:

The following data is in TOON format. The first line is the header.

4. LLM Output Layer

You can even ask the model to output back in TOON for consistency.

This hybrid approach gives you:

- JSON stability + ecosystem

- TOON efficiency + performance

Best of both worlds.
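
The four layers can be sketched end to end without calling any model. The code below assumes the simplified pipe-delimited layout used in this article. `to_table`, `build_prompt`, and `parse_table` are hypothetical helpers, and the `reply` string is a stand-in for a model response, not real output.

```python
import json

TOON_NOTE = "The following data is in TOON format. The first line is the header."


def to_table(rows):
    """Conversion layer: keys once in the header, then one line per row."""
    keys = list(rows[0])
    lines = [" | ".join(keys)]
    lines += [" | ".join(str(r[k]) for k in keys) for r in rows]
    return "\n".join(lines)


def build_prompt(task, json_text):
    """Instruction layer: task, format note, then the converted data."""
    rows = json.loads(json_text)  # the application layer stays in JSON
    return f"{task}\n\n{TOON_NOTE}\n\n{to_table(rows)}"


def parse_table(text):
    """Output layer: turn a table reply back into dicts (all values stay strings)."""
    lines = [ln for ln in text.strip().splitlines() if ln.strip()]
    keys = [k.strip() for k in lines[0].split("|")]
    return [dict(zip(keys, (v.strip() for v in ln.split("|")))) for ln in lines[1:]]


payload = '[{"id": 101, "name": "Sarah"}, {"id": 102, "name": "Omar"}]'
prompt = build_prompt("List the admins.", payload)
print(prompt)

reply = "id | name\n101 | Sarah"  # stand-in for what a model might return
print(json.dumps(parse_table(reply)))
```

Because `parse_table` returns every value as a string, a real pipeline would need to restore numeric and boolean types before handing the result back to JSON-based services.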

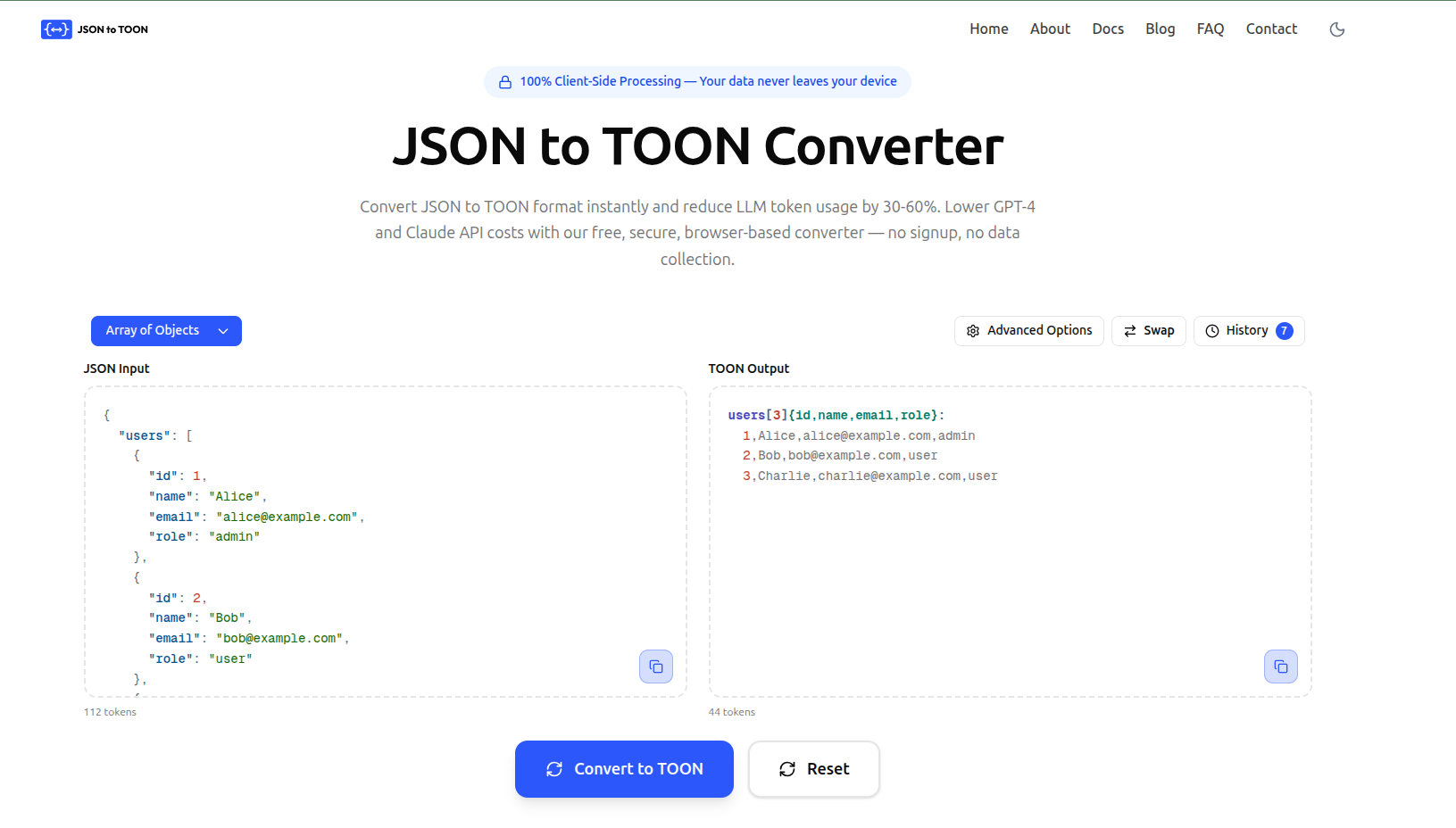

How To Use a JSON to TOON Converter Online (Step-by-Step)

You can convert automatically using these steps:

- Paste JSON — Paste your JSON into the input area or click Load Example to auto-fill a sample.

- Customize output — Choose a delimiter (comma, tab, pipe), set indentation, and toggle length markers.

- View TOON output — TOON appears instantly as you type; verify structure.

- Copy or download — Click Copy to copy to clipboard or Download to save as a .txt file.

This is what the TOON output looks like after converting JSON using JSON to TOON

You’re now ready to use the TOON data in your LLM prompts or AI workflows.

Conclusion: Try TOON Now and Instantly Reduce Token Cost

Tokens are too expensive to waste.

JSON’s verbose structure introduces:

- token bloat

- increased cost

- reduced accuracy

- lost context window

TOON gives you:

- substantial token savings

- better model comprehension

- faster responses

- more room for instructions

- higher accuracy on extraction tasks

TOON is not here to replace JSON universally—but it is the right tool for the LLM era.

If you handle large structured prompts, you’re leaving money and performance on the table by sticking to JSON.

Try a JSON to TOON Converter Now and Instantly Reduce Token Costs

Take this quick test:

- Export a large JSON array from your app.

- Convert it using a JSON to TOON converter online.

- Compare token counts for the JSON vs TOON output.

- Evaluate both through your LLM.

You should see:

- Lower token usage

- Faster inference

- Better parsing accuracy

FAQs

1. What is JSON to TOON and how does it work?

JSON to TOON is a tool that converts JSON data into TOON (Token-Oriented Object Notation). It removes extra syntax like braces, brackets, and unneeded quotes while keeping the structure intact through indentation. The converter uses a deterministic algorithm for consistent results. All processing happens locally in your browser for maximum speed and security.

2. How much can I save by using a JSON to TOON converter?

Benchmarks suggest that a JSON-to-TOON conversion can cut token usage by 30–60%, especially for large, uniform arrays of objects.

3. Can LLMs understand TOON easily?

Yes. LLMs read TOON more easily than JSON because it has less “noise” and more meaningful signal.

4. Is the JSON to TOON converter free to use?

Yes, our JSON to TOON converter is completely free with no limitations. You can convert unlimited JSON data without signup or subscription. The tool runs entirely in your browser, so there are no server costs or rate limits.

5. Is TOON safe for sensitive data?

Yes. It’s purely a formatting style, so it adds no security risks of its own.

6. Does TOON replace JSON completely?

No. JSON remains essential for APIs, storage, and complex data. TOON is a prompt optimization format, not a universal replacement.

7. Do LLMs understand TOON format from a JSON to TOON converter?

All major LLMs, including GPT-4, Claude, and Gemini, support TOON output from our JSON-to-TOON converter. With its clean, YAML-like syntax, TOON is easy for models to process. Benchmarks show 86.6% accuracy with TOON vs 83.2% with JSON, suggesting that the converter not only saves tokens but can also improve model performance. TOON works directly in prompts, with no special instructions needed.